Keywords: algorithmic marketing, autonomy, consumer responses, cross-cultural differences, fairness, personalization, privacy concerns, trust

JEL Classification: M31, M37, D91, D83, O33

Introduction

Algorithmic marketing is reshaping consumer-firm relationships. Machine learning, recommender systems, and predictive analytics enable marketers to target and personalize offers at an unprecedented scale. These practices can help consumers receive more relevant offers with greater convenience, but can also evoke concerns about autonomy, fairness, and privacy. Algorithmic marketing's pervasive use has spurred academic interest in how people react to being profiled and targeted by algorithms.

Yet research on algorithmic marketing is still fragmented across multiple literatures. Work on trust, fairness, privacy, cross-cultural differences, and other aspects often proceeds in parallel and has rarely been integrated. This makes it challenging to take stock of the broader set of dimensions along which consumers experience and respond to algorithms. In addition, far more work has addressed the technical side of algorithms, i.e., how to design them, than the social and psychological forces that drive acceptance or resistance.

This paper’s goal is to help bridge this research-practice gap by undertaking a bibliometric review of consumer responses to algorithmic marketing and a synthesis of the existing literature into an integrative conceptual framework. This study aims to answer the following question: How do consumers respond to algorithmic marketing, and what are the theoretical and practical implications of their responses?

The study’s contributions are threefold. First, the paper provides an overview and synthesis of the otherwise fragmented streams of literature by undertaking a bibliometric review. The study maps the structure and evolution of this field by identifying major research topics, most influential authors, and most impactful articles. Second, the paper develops an integrative conceptual framework that conceptualizes autonomy, trust, privacy, and cultural variation as critical factors shaping consumers’ responses to algorithms. Third, the paper derives future research avenues and managerial implications for responsibly pursuing algorithmic marketing.

The paper’s potential contributions to advancing research and practice in this area include increasing academic understanding of the social and psychological factors that underlie the complex relationships between algorithms and people.

Literature Review

Background and Scope

Algorithmic marketing has been one of the fastest-growing domains of the last decade, with almost every brand now using some form of data-driven system to personalize and optimize its content, advertising, pricing, and recommendations. The business performance and personalization potential of such automated systems have been a primary research and development focus. However, algorithmic marketing also raises more fundamental questions about the nature of consumer experiences in mediated environments and about how consumers perceive, react to, and evaluate these environments. Increasingly, researchers in marketing, information systems, and consumer psychology have been asking how users make sense of algorithmic systems and make decisions around algorithmic processes: what they trust, how they interact with these systems, and how they think and feel about them.

The answer that research has so far provided on this question is a mixed one. While there is substantial support for the personalization benefits of AI systems in terms of customer satisfaction, engagement, and loyalty (Boerman et al., 2017), other research has identified the more concerning side of algorithmic marketing, which is its potential to invoke negative experiences and user reactions based on aversion to being monitored and nudged by AI systems (Longoni, 2019). The co-existence of both these reactions to similar stimuli has been interpreted to imply that users do not readily embrace these technologies simply based on their performance. In fact, their acceptance of algorithmic systems and personalized experiences is conditional and affected by their perceptions of these systems as being fair, transparent, and respecting their agency.

The purpose of this literature review is to provide an overview of recent empirical and conceptual research on consumer responses to algorithmic marketing, organized around the central themes of how consumers think and feel about these processes, namely trust, fairness, autonomy, privacy, and personalization. This structured approach is intended to clarify what is already well established in the area and where the main gaps lie. The review closes by identifying major blind spots in the current literature that motivate the conceptual approach of the rest of the paper.

Algorithmic Marketing Landscape

Algorithmic marketing is the application of machine learning and artificial intelligence to study consumer behavior, generate predictive insights, and deliver precision marketing interventions at scale. Companies increasingly employ recommendation engines, dynamic pricing, programmatic media, and automated content selection to improve both business performance and customer experience. The appeal lies in the effectiveness of these systems: they can process far more information than humans can and adapt to consumer behavior in real time (Kietzmann et al., 2018).

Current research emphasizes how algorithmic marketing has altered organizational strategy priorities. Rather than using sweeping segmentation, firms may customize offerings and communications down to the level of the individual user, diminishing the boundary between mass marketing and personalization (Huang and Rust, 2021). Streaming services and e-commerce websites have established competitive strength based on algorithmic recommendations that frame consumer decision-making and engagement (Jannach and Adomavicius, 2017).

Meanwhile, this technological shift has raised questions about opacity and accountability. Algorithms are typically “black boxes,” which leaves consumers uncertain about how decisions are made and exposes companies to criticism when results are seen as unfair or biased (Martin and Murphy, 2017). Researchers point out that the growing use of predictive analytics in marketing calls not only for technical optimization but also for careful handling of consumer perceptions, as trust is key to adoption and continued usage (Yeomans et al., 2019).

Together, the research presents algorithmic marketing as both a strong facilitator of customized engagement and a potential cause of doubt among consumers. Due to this ambivalence, it is crucial to investigate how individuals react to algorithmic decisions in marketing scenarios.

Consumer Trust and Fairness

Trust sits at the center of how people react to algorithmic marketing. When a system seems competent, open about how it works, and free from bias, people are more willing to follow its suggestions and keep engaging with the brand (Dietvorst and Bartels, 2022). When it feels opaque or manipulative, trust erodes and resistance grows (Araujo et al., 2020).

Fairness matters just as much. People judge not only what the algorithm decides but how it gets there (Dietvorst and Bartels, 2022). If they believe the process treats them fairly and avoids hidden bias, they’re more likely to accept the result. If they suspect discriminatory targeting or pricing that takes advantage of them, they pull back (Langer, Ostermaier and Vogel, 2021).

Transparency helps, but it has to be the right kind. Short, clear explanations of why a recommendation appears can ease concerns. Too much technical detail overwhelms. Too little feels like a dodge. The sweet spot pairs simple language with enough clarity for people to make an informed choice (Eslami et al., 2018; Shin and Park, 2019).

The broad lesson is straightforward. Trust and fairness are not extras. If an algorithm chases accuracy alone, it can still fail in the eyes of users. Build for competence, clarity, and equity from the start, and align the experience with what people expect from a fair, reliable system.

Personalization and Relevance

Personalization is a frequent promise of algorithmic marketing. In theory, the consumption data made available at an unprecedented scale can enable hyper-relevant recommendations, ads, and offers. Studies confirm that relevance can improve customer satisfaction, engagement, and loyalty, as consumers feel recognized and understood (Boerman et al., 2017).

Personalization may not always be perceived positively, and the context is essential. On the one hand, consumers tend to accept personalization when it eases their efforts, saves time, or is rewarding, in line with literature about self-determination and utility maximization. On the other hand, consumers tend to feel discomfort and react to “too much” personalization or to situations where data use does not feel proportional to the benefits (Aguirre et al., 2015; Kokolakis, 2017). This has also been called the “personalization–privacy paradox” or P3, which has been observed with social media (Li, 2019).

Consumers also judge the degree of personalization, which can affect their experience and trust in a brand or platform. Relevant and timely algorithmic suggestions strengthen trust and willingness to use a given system, whereas poorly chosen, irrelevant recommendations weaken them (Jannach, Resnick and Tuzhilin, 2016). Newer research suggests that consumers across countries and demographics differ in how much personal information they are willing to share and in the value propositions they expect in exchange, while some remain privacy-protective (Schumacher et al., 2023).

Research about personalization often points out that, although it may deepen customer engagement and relationships, over-targeting may cause push-back from consumers over perceived risks to autonomy, manipulative communication, and privacy loss. The marketer’s dilemma is then finding the “goldilocks zone” of personalization that provides value without triggering such consumer concerns.

Autonomy and Control

The perception of consumer autonomy is a critical factor in algorithmic marketing. Autonomy in this context can be defined as the degree to which consumers feel a sense of personal agency when making a decision, even when an algorithm has affected the outcome in some way. In general, when consumers do not feel in control, or feel that the algorithm is controlling their decision-making, they feel less positive about a purchase and hold more negative attitudes toward the service providing the algorithm (Yeomans et al., 2019). On the other hand, if consumers feel that their autonomy is respected and not “undermined” by algorithms, they are more accepting of these services (Custers, van der Hof and Schermer, 2016).

Perceived control is at the center of this issue, and when it is present, consumers often respond positively. For example, allowing consumers to change preferences, filter options, or reject recommendations can increase acceptance and satisfaction (Eiband et al., 2018). Even the “illusion of control” has been shown to benefit consumers: giving consumers the sense that they are “in charge” of a situation can reduce resistance to algorithmic marketing (Shin, 2020).

As in other aspects of consumer behavior, this effect has a “sweet spot.” While autonomy and control increase trust in most circumstances, too many options and feedback mechanisms have been shown to be cognitively demanding and, in some cases, to decrease the usability of the service, which can negatively affect consumers (Pizzi et al., 2021). This reflects the tension between efficiency and control in algorithmic design.

Autonomy concerns are particularly salient in situations where algorithms are used for high-stakes or emotionally laden decisions, such as in the case of financial services or health care (Castelo, Bos and Lehmann, 2019). Consumers in these domains are likely to demand not only accuracy, but also assurances that their own judgment will not be eclipsed (Castelo, Bos and Lehmann, 2019). In the context of marketing, even though the consequences for the consumer are likely to be less severe, similar considerations apply: the consumer will not welcome the feeling of being directed or ‘pushed’ to a choice that is constrained by the algorithm without their consent.

Research indicates that consumer acceptance of algorithmic marketing depends on respecting consumer autonomy while providing them with appropriate control options.

Privacy Concerns

Privacy sits near the top of what worries people about algorithmic marketing. Algorithms run on large amounts of personal data, so people ask basic questions. What did you collect, why did you collect it, where is it stored, and who can see it (Martin and Murphy, 2017). Fears about surveillance and losing anonymity are stronger in a world where clicks, locations, and habits can be tracked and used to sell to us (Malhotra, Kim and Agarwal, 2019).

At the center is a trade-off between personalization and privacy. Many people will accept data collection when the payoff is clear, immediate, and fair, like convenience or a lower price. When collection feels excessive or not truly consented to, discomfort and mistrust grow (Aguirre et al., 2015). Researchers often call this the privacy calculus, where people weigh risks against rewards before deciding to share (Culnan and Bies, 2003; Keith et al., 2016).

Transparency and control help. Plain explanations about what is collected and how it is used, paired with easy ways to adjust or revoke consent, can build trust and reduce pushback (Shin and Park, 2019). The catch is that many privacy notices are long, technical, and hard to follow, so people still walk away without real understanding (Schaub et al., 2017).

What this means for marketers is simple. Privacy shapes what people will share today and how they feel about your brand tomorrow. Ignore it and you risk lost data, broken relationships, complaints, and penalties. Treat it as both the right thing to do and smart strategy. Collect only what you need, explain it clearly, give people real choices, and stand by those choices in every part of your algorithmic marketing.

Emotional Reactions to Algorithms

In addition to rational and controlled processes, consumers also respond to algorithmic marketing on an emotional level. Emotions such as trust, comfort, anxiety, or even resentment can influence how consumers interpret and react to algorithmic interventions. Studies show that when consumers perceive algorithms as fair and accurate, they are more likely to feel reassured and engage positively with algorithmic outputs (Logg, Minson and Moore, 2019). On the other hand, perceived bias, error, or manipulation can provoke frustration and disengagement (Lee, Kim and Sundar, 2015).

Algorithmic aversion is one of the most well-documented themes. Even when algorithms provide better performance than humans, consumers often opt for human input because algorithms are viewed as impersonal, cold, or lacking in understanding of nuance (Dietvorst, Simmons and Massey, 2015). This aversion can result in resistance to marketing personalization, particularly in situations that consumers feel require empathy, creativity, or a human touch.

Conversely, some research has identified a phenomenon called algorithmic appreciation. In some cases, consumers may defer to algorithmic recommendations precisely because they are seen as objective and data-driven (Burton, Stein and Jensen, 2020). The emotional response in such cases is contingent on the level of trust in the institution implementing the algorithm and the perceived consequences of the decision.

Emotional reactions to algorithmic marketing also differ across demographic groups. Younger consumers have shown more comfort with algorithmic personalization, whereas older consumers often report higher levels of anxiety or distrust (Huang and Rust, 2021). Cultural differences also play a role, with varying perceptions of whether algorithms are helpful tools or intrusive forces (Arora, Hofacker and Khare, 2021).

In sum, emotional responses are critical to understanding consumer acceptance of algorithmic marketing. Marketers need to recognize that, in addition to addressing rational concerns about privacy and control, feelings of trust, fairness, and empathy are powerful drivers of whether consumers embrace or reject algorithmic personalization.

Cross-Cultural and Contextual Differences

People don’t respond to algorithmic marketing the same way everywhere. Culture, social norms, and local rules shape what feels acceptable or useful. In many collectivist settings, including parts of East Asia, appeals that stress shared benefits can make personalization feel more trustworthy and welcome (Schumacher et al., 2023). In more individualistic markets like the United States, people tend to guard autonomy and privacy more closely, so pushy or opaque targeting often meets resistance (Schumacher et al., 2023).

Setting matters too. When choices touch health or money, people slow down, ask where a recommendation came from, and look for signals of fairness and clarity (Longoni, Bonezzi and Morewedge, 2019). In lighter contexts such as shopping suggestions or what to watch next, many will accept tailored content if it clearly saves time or improves the experience (Aguirre et al., 2015).

Age cohorts add another layer. Many younger users grew up with personalized feeds and app ecosystems, so they often trade data for convenience without much friction (Bol, Helberger and Weert, 2018). Older users are more likely to worry about misuse, struggle with unclear controls, and opt out when things feel invasive (Huang and Rust, 2021).

Law and institutions set the baseline. The GDPR in the European Union raised awareness about data rights and nudged expectations toward clear consent and explainability (Martin and Murphy, 2017). Where protections are weaker, people may feel exposed or simply give up on trying to control what happens with their data.

The takeaway is simple. Avoid one-size-fits-all tactics. Tailor the pitch, the level of transparency, and the control options to the culture, the stakes of the industry, and the audience you aim to reach. That is how personalization stays helpful rather than intrusive.

Research Gap

Although consumer research in this field has made rapid progress, three key gaps remain. First, although numerous studies have identified how factors such as trust or personalization influence consumers’ responses to algorithmic systems, these factors have mostly been studied in isolation. For example, it remains unclear to what extent previous research has identified the drivers of, or barriers to, consumer trust in and adoption of algorithmic systems. Second, most of the existing empirical evidence remains siloed in different industries or contexts, with a paucity of studies examining how far these findings generalize. Finally, the implications of these consumer insights for managers and policymakers remain surprisingly unelaborated.

The current paper overcomes these gaps in three ways. First, it provides a critical review of the literature, identifying recent contributions. Second, it organizes the vast range of findings into a set of themes. Third, it develops a conceptual framework that captures the interplay between the broad range of consumer responses across trust, fairness, transparency, and personalization.

Conceptual Framework

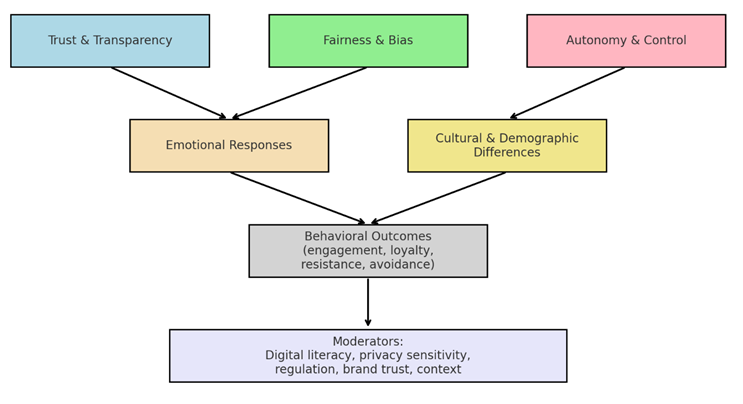

Figure 1 illustrates the conceptual framework we developed based on previous research findings. This framework takes into account four different key themes, which are autonomy and control, privacy concerns, emotional responses, and cross-cultural and contextual factors. The interaction between these four factors will shape consumers’ perceptions, values, and reactions, which will in turn lead to acceptance or resistance.

The bottom layer of the framework is the juxtaposition of personalization and perceived intrusiveness. Consumers are exposed to algorithmic output in various contexts, and they weigh its perceived relevance, utility, or quality not only against their goals but also against their autonomy (Longoni, Bonezzi and Morewedge, 2019). Thus, a feeling that autonomy and freedom of choice are constrained will lead to resistance, even when convenience and personalization increase.

A similar logic applies to privacy concerns. The belief that algorithms lead to data misuse, surveillance, or opacity will decrease consumers’ trust in them and foster skepticism (Martin and Murphy, 2017). Privacy concerns are especially intense in situations involving sensitive data, such as financial services or healthcare, where consumers demand more transparency and accountability (Huang and Rust, 2021).

In turn, emotions can be seen as an affective lens that allows us to capture the dynamics of acceptance and resistance more broadly. Although negative emotions, such as discomfort, anxiety, or surprise, will likely be at the fore, positive affective experiences should not be overlooked. Consumers may experience delight, pleasant surprise, or gratitude when algorithms offer relevant recommendations, solve problems, or enable convenient experiences (Bol, Helberger and Weert, 2018). These emotions may also reinforce trust and acceptance.

The last factor, cross-cultural and contextual differences, plays a moderating role in all of the previously mentioned dynamics. For instance, individualistic cultures are likely to place more emphasis on autonomy and privacy concerns, while collectivist cultures may value communal benefits and trust in institutions more (Cho, 2022; Tuncdogan and Wubben, 2021). Contextual factors, such as whether a decision is high- or low-stakes or the social setting in which it is made, may also make consumers either more lenient or more critical toward algorithmic marketing.

Consumer acceptance of algorithmic marketing in the framework presented in this paper is the result of all of the factors mentioned earlier, combined with trust and perceived fairness, which serve as mediators between the input factors and acceptance. The framework, in this way, provides a way to connect these individual factors and at the same time is structured in a way that will allow it to be empirically tested in the future.

Figure 1. Conceptual Framework of Consumer Responses to Algorithmic Marketing

Source: Author’s own work
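One way the mediating and moderating structure of Figure 1 could be written down for future empirical testing is as a moderated mediation model. The equations below are an illustrative sketch only, not a specification given in this paper: the linear form and all symbols are assumptions. Here $X_{ki}$ denotes consumer $i$'s score on input factor $k$ (autonomy and control, privacy concerns, emotional responses), $C_i$ a cultural or contextual moderator, $T_i$ trust, $F_i$ perceived fairness, and $A_i$ acceptance.

```latex
\begin{align}
T_i &= \alpha_0 + \sum_{k} \alpha_k X_{ki} + \delta C_i + \sum_{k} \gamma_k X_{ki} C_i + \varepsilon_i \\
F_i &= \alpha'_0 + \sum_{k} \alpha'_k X_{ki} + \delta' C_i + \sum_{k} \gamma'_k X_{ki} C_i + \varepsilon'_i \\
A_i &= \beta_0 + \beta_T T_i + \beta_F F_i + \sum_{k} \beta_k X_{ki} + u_i
\end{align}
```

Under these assumptions, the interaction coefficients $\gamma_k$ and $\gamma'_k$ capture moderation by culture or context, and the products $\alpha_k \beta_T$ and $\alpha'_k \beta_F$ capture the indirect effects of each input factor on acceptance through trust and fairness.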

Research Methodology

The current paper is based on a bibliometric and conceptual review. The research is guided by three objectives: to map the field of knowledge production, to identify conceptual and thematic clusters, and, on the basis of this mapping, to develop an integrative framework that highlights the main research gaps at the theoretical and practical levels.

Bibliometric analysis is a common research method in marketing and management sciences because it provides a comprehensive and objective view of the intellectual structure of a research domain (Zupic and Cater, 2015). It makes it possible to identify significant works and topics, trends in knowledge production and dissemination, and areas of fragmented, emerging, and underdeveloped research (Zupic and Cater, 2015). A conceptual review aims not only to summarize previous studies but also to synthesize patterns and make theoretical propositions (Snyder, 2019).

The data for this review were collected from three major databases: Scopus, Web of Science, and Google Scholar. These databases were selected because they provide comprehensive coverage of peer-reviewed marketing, management, and information systems literature. The search was conducted using keywords such as “algorithmic marketing,” “personalization algorithms,” “consumer trust,” “privacy,” “digital targeting,” and “automated decision-making.” To ensure both depth and currency, the search was limited to journal articles and conference proceedings published between 2013 and 2023. Books and practitioner reports were reviewed selectively to provide context but were not included in the bibliometric analysis.

The initial search returned over 300 articles. After removing duplicates and applying inclusion criteria (peer-reviewed, English language, direct relevance to algorithmic marketing or consumer response), approximately 120 articles were retained for detailed review. Each study was coded for its research context (e.g. retail, financial services, healthcare), methodology (quantitative, qualitative, or conceptual), and thematic focus (autonomy, privacy, fairness, trust, or cross-cultural dynamics).
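The screening step described above (removing duplicates across databases, then applying the inclusion criteria) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure actually used for the review: the `Record` fields, the title-based duplicate check, and the function names are all hypothetical, and the judgment of "direct relevance" is assumed to be made manually afterward.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One search hit; field names are hypothetical, not a database export format."""
    title: str
    year: int
    language: str
    peer_reviewed: bool
    doc_type: str  # e.g. "article", "proceedings", "book", "report"


def normalize_title(title: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in title)
    return " ".join(cleaned.split())


def screen(records, start_year=2013, end_year=2023):
    """Drop duplicate titles, then keep records meeting the formal criteria.

    Relevance to algorithmic marketing is NOT checked here; that step is
    assumed to be a manual judgment applied to the returned list.
    """
    seen = set()
    kept = []
    for rec in records:
        key = normalize_title(rec.title)
        if key in seen:
            continue  # same title already seen, e.g. from another database
        seen.add(key)
        if (
            rec.peer_reviewed
            and rec.language == "English"
            and rec.doc_type in {"article", "proceedings"}
            and start_year <= rec.year <= end_year
        ):
            kept.append(rec)
    return kept
```

Matching on normalized titles is a deliberately simple design choice; a real pipeline might match on DOI where one is available, since titles can differ slightly between databases.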

The analysis proceeded in two stages. First, bibliometric mapping was used to trace citation networks, keyword co-occurrence, and thematic clusters. Second, a qualitative synthesis of the retained studies was carried out to highlight tensions, gaps, and emerging directions. This dual strategy allows the review to remain comprehensive while also offering original insights.

The integration of bibliometric and conceptual methods ensures that the paper not only reflects the current state of knowledge but also provides a foundation for theory-building. This methodological design underpins the development of the conceptual framework presented earlier and the discussion of contributions in the final section.

Analysis and Results

The analysis combined bibliometric mapping with thematic synthesis to uncover the intellectual structure of research on algorithmic marketing and consumer responses. This section reports the main findings in two parts: (1) bibliometric insights, and (2) thematic results.

Bibliometric Review

The bibliometric review indicates a swift increase in the volume of literature on algorithmic marketing since 2016, especially after 2019. The spike in interest aligns with the broader penetration of artificial intelligence and machine learning in digital marketing applications (Wedel and Kannan, 2016). Citation network mapping highlighted several key contributions, especially concerning consumer trust (Bleier and Eisenbeiss, 2015), privacy issues (Martin and Murphy, 2017), and fairness in algorithmic decision-making (Cowgill and Tucker, 2020).

Keyword co-occurrence analysis revealed that “personalization,” “consumer privacy,” and “algorithmic bias” are the central clusters in the literature. Secondary clusters included “cross-cultural differences,” “autonomy,” and “ethical AI.” These results confirm that the literature in this area is fragmented, with some strong but fairly isolated streams of research.
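For readers unfamiliar with the technique, the core of a keyword co-occurrence analysis is a count of how often two keywords appear on the same article. The sketch below illustrates that counting step only. It is an assumption-laden illustration rather than the tool or procedure used in this review: the function names are hypothetical, and the clustering and visualization steps that dedicated bibliometric software performs are omitted.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(keyword_lists):
    """Count how many articles list each unordered pair of keywords."""
    counts = Counter()
    for keywords in keyword_lists:
        # Normalize case and whitespace; count each keyword once per article.
        unique = sorted({kw.strip().lower() for kw in keywords if kw.strip()})
        counts.update(combinations(unique, 2))
    return counts


def keyword_strength(counts):
    """Sum each keyword's co-occurrence counts across all of its pairs."""
    strength = Counter()
    for (a, b), n in counts.items():
        strength[a] += n
        strength[b] += n
    return strength


# Toy input: one keyword list per article (illustrative, not the review's data).
articles = [
    ["personalization", "consumer privacy", "trust"],
    ["Personalization", "consumer privacy"],
    ["algorithmic bias", "personalization"],
]
pairs = cooccurrence_counts(articles)
strength = keyword_strength(pairs)
```

In this toy input, "personalization" has the highest total strength because it co-occurs with every other keyword, which is the sense in which a keyword is described as central to a cluster.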

Thematic Results

From the qualitative synthesis of 120 studies, five key themes emerged:

Autonomy and Control

Research consistently shows that consumers value transparency and the ability to exercise some level of control over algorithmic decision-making. Lack of perceived autonomy often leads to resistance, reduced satisfaction, and avoidance behaviors (Shin and Park, 2019).

Trust and Fairness

Trust in algorithmic systems is fragile and closely tied to perceptions of fairness. Studies indicate that when algorithms are perceived as biased or opaque, consumers are less likely to engage positively with the outcomes (Dietvorst et al., 2018). Fairness-related concerns are especially prominent in contexts such as credit scoring and recruitment.

Privacy and Data Concerns

Privacy remains one of the most researched areas, with findings showing that perceived intrusiveness strongly shapes consumer attitudes. Strikingly, even when consumers accept personalization benefits, concerns about how their data are used persist (Martin et al., 2017). This “privacy paradox” continues to be a critical tension in algorithmic marketing.

Cultural and Contextual Differences

Evidence suggests that responses to algorithmic marketing are not universal. Cultural values, regulatory environments, and sector-specific norms all shape consumer reactions. For instance, consumers in high individualism cultures exhibit stronger resistance to opaque algorithmic targeting than those in collectivist contexts (Aguirre et al., 2015).

Emerging Research Gaps

Several underdeveloped areas were identified. First, relatively few studies examine long-term consumer responses, as most focus on immediate attitudes or behaviors. Second, cross-disciplinary perspectives, such as those integrating insights from human-computer interaction, are still rare. Finally, practical implications for firms are often underexplored, limiting managerial guidance.

Synthesis

The results reveal both the strengths and weaknesses of existing research. While the literature provides strong evidence of consumer concerns around autonomy, trust, and privacy, there remains limited integration across these themes. The fragmentation of research prevents a holistic understanding of how consumers navigate algorithmic marketing. This study addresses this gap by proposing a conceptual framework that integrates these dimensions and highlights their interconnections.

Discussion and Conclusion

This study set out to review and synthesize the growing body of research on consumer responses to algorithmic marketing. Through bibliometric analysis and thematic synthesis, it identified the intellectual foundations of the field and mapped the main areas of consumer concern, including autonomy, trust, privacy, and cultural variation.

The review identifies trust, fairness, personalization, autonomy, privacy, emotions, and cultural context as the primary dimensions along which consumers respond to algorithmic marketing. It also suggests that consumers do not respond to algorithmic marketing in a homogeneous manner: responses vary with cognitive and emotional factors as well as with demographic and cultural differences.

The contributions to research and practice can be summarized as follows. The research contribution is to connect several partial studies on trust, personalization, and privacy into an overarching framework. The proposed relationships between these variables and consumer acceptance of algorithmic marketing offer a more holistic view of the factors that drive people to accept or resist an algorithm's recommendations. Most previous research has focused on single topics in isolation. This paper shows how these themes are related and how together they shape consumer responses to algorithmic marketing. The framework can serve as a basis for future causal studies to test and refine, as well as an agenda for less-researched topics such as generational and cross-cultural differences.

The contribution to practice is that the results clearly show that the success or failure of algorithmic marketing depends not only on its technical correctness but also on the extent to which personalization respects consumer autonomy, transparency does not overload users with information, and privacy concerns are taken seriously. Practical implications for managers are that they should pay attention to giving users the ability to control their interaction with recommender systems, make data practices and decisions understandable to consumers, and tailor personalization to different cultures and individual preferences to minimize resistance and establish long-term trust.

The study also has policy implications, which follow from the differing experience of regions with regulation and privacy. The review provides evidence that regional differences in privacy-protective legislation, such as the GDPR, affect consumer reactions to algorithmic marketing. Markets with weaker regulatory environments might therefore draw policy lessons from research conducted in regions with stronger consumer protection. The review also highlights the need for research that not only documents problems with algorithmic marketing but also attempts to solve them. Future research should go beyond describing consumers’ responses and test interventions that might improve them. For instance, studies could vary transparency tools or the degree of user control and examine their impact on acceptance under different conditions. Longitudinal data might also show whether consumer responses change over time as people become more accustomed to interacting with AI systems.

In conclusion, this review contributes to marketing research, and to research on algorithmic marketing in particular. Algorithmic marketing offers opportunities but also poses challenges related to trust, fairness, personalization, autonomy, privacy, emotions, and cultural context. It is essential that algorithmic marketing systems are designed with this understanding and adapted to different cultural and demographic groups, so that firms can benefit from the technology without facing consumer rejection.

---

Funding: This research received no external funding.

Conflicts of Interest: The authors state that they have no conflicts of interest.

---

Disclaimer/Publisher’s Note: The views, statements, opinions, data and information presented in all publications belong exclusively to the respective Author/s and Contributor/s, and not to Sprint Investify, the journal, and/or the editorial team. Hence, the publisher and editors disclaim responsibility for any harm and/or injury to individuals or property arising from the ideas, methodologies, propositions, instructions, or products mentioned in this content.

References

- Aguirre, E., Mahr, D., Grewal, D., de Ruyter, K. and Wetzels, M., 2015. Unraveling the personalization paradox: the effect of information collection and trust-building strategies on online advertisement effectiveness. Journal of Retailing, 91(1), pp.34–49. https://doi.org/10.1016/j.jretai.2014.09.005

- Araujo, T., Helberger, N., Kruikemeier, S. and de Vreese, C.H., 2020. In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI & Society, 35(3), pp.611–623. https://doi.org/10.1007/s00146-019-00931-w

- Bleier, A. and Eisenbeiss, M., 2015. The importance of trust for personalised online advertising. Journal of Retailing, 91(3), pp.390–409. https://doi.org/10.1016/j.jretai.2015.05.003

- Boerman, S.C., Kruikemeier, S. and Zuiderveen Borgesius, F.J., 2017. Online behavioural advertising: a literature review and research agenda. Journal of Advertising, 46(3), pp.363–376. https://doi.org/10.1080/00913367.2017.1339368

- Bol, N., Helberger, N. and van Weert, J.C.M., 2018. Differences in mobile health app use: a source of new digital inequalities? The Information Society, 34(3), pp.183–193. https://doi.org/10.1080/01972243.2018.1438550

- Burton, J.W., Stein, M-K. and Jensen, T.B., 2020. A systematic review of algorithm aversion. Journal of Behavioral Decision Making, 33(2), pp.220–239. https://doi.org/10.1002/bdm.2155

- Castelo, N., Bos, M.W. and Lehmann, D.R., 2019. Task-dependent algorithm aversion. Journal of Marketing Research, 56(5), pp.809–825. https://doi.org/10.1177/0022243719851788

- Custers, B., van der Hof, S. and Schermer, B., 2016. Privacy expectations of consumers in the context of smart devices. Computer Law & Security Review, 32(2), pp.272–289

- Dietvorst, B.J. and Bartels, D.M., 2022. Consumers object to algorithms making morally relevant tradeoffs because of algorithms’ consequentialist decision strategies. Journal of Consumer Psychology, 32(3), pp.406–424. https://doi.org/10.1002/jcpy.1266

- Dietvorst, B.J., Simmons, J.P. and Massey, C., 2015. Algorithm aversion: people erroneously avoid algorithms after seeing them err. Journal of Experimental Psychology: General, 144(1), pp.114–126. https://doi.org/10.1037/xge0000033

- Dietvorst, B.J., Simmons, J.P. and Massey, C., 2018. Overcoming algorithm aversion: people will use imperfect algorithms if they can slightly modify them. Management Science, 64(3), pp.1155–1170. https://doi.org/10.1287/mnsc.2016.2643

- Eiband, M., Schneider, H., Bilandzic, M., Fazekas-Con, K., Haug, M. and Hussmann, H., 2018. Bringing transparency design into practice. In: Proceedings of the 23rd International Conference on Intelligent User Interfaces (IUI 2018). ACM, pp.211–223. https://doi.org/10.1145/3172944.3172961

- Eslami, M., Krishna Kumaran, S.R., Sandvig, C. and Karahalios, K., 2018. Communicating algorithmic process in online behavioural advertising. In: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, Paper 432, pp.1–13. https://doi.org/10.1145/3173574.3173840

- Gómez-Uribe, C.A. and Hunt, N., 2015. The Netflix recommender system: algorithms, business value, and innovation. ACM Transactions on Management Information Systems, 6(4), Article 13, pp.1–19.

- Huang, M-H. and Rust, R.T., 2021. A strategic framework for artificial intelligence in marketing. Journal of the Academy of Marketing Science, 49(1), pp.30–50. https://doi.org/10.1007/s11747-020-00749-9

- Jannach, D. and Adomavicius, G., 2016. Recommendations with a purpose. In: Proceedings of the 10th ACM Conference on Recommender Systems, pp.7–10. https://doi.org/10.1145/2959100.2959186

- Jannach, D., Resnick, P., Tuzhilin, A. and Zanker, M., 2016. Recommender systems beyond matrix completion. Communications of the ACM, 59(11), pp.94–102. https://doi.org/10.1145/2891406

- Keith, M.J., Thompson, S.C., Hale, J. and Lowry, P.B., 2016. Try it on without risk the fit between privacy policy and website design as a factor in online trust. AIS Transactions on Human-Computer Interaction, 8(2), pp.48–69.

- Kietzmann, J., Paschen, J. and Treen, E., 2018. Artificial intelligence in advertising: how marketers can leverage AI along the consumer journey. Journal of Advertising Research, 58(3), pp.263–267. https://doi.org/10.2501/JAR-2018-035

- Kokolakis, S., 2017. Privacy attitudes and privacy behaviour: a review of current research on the privacy paradox phenomenon. Computers & Security, 64, pp.122–134. https://doi.org/10.1016/j.cose.2015.07.002

- Langer, M., Ostermaier, A. and Vogel, T., 2021. Toward fairness in algorithmic decision making. Computers in Human Behavior, 120, 106751. https://doi.org/10.1016/j.chb.2021.106751

- Lee, S., Kim, K.J. and Sundar, S.S., 2015. Customization in location-based advertising: effects of tailoring source, locational congruity, and product involvement on ad attitudes. Computers in Human Behavior, 51, pp.336–343. https://doi.org/10.1016/j.chb.2015.04.030

- Logg, J.M., Minson, J.A. and Moore, D.A., 2019. Algorithm appreciation: people prefer algorithmic to human judgment. Organizational Behavior and Human Decision Processes, 151, pp.90–103. https://doi.org/10.1016/j.obhdp.2018.12.005

- Longoni, C., Bonezzi, A. and Morewedge, C.K., 2019. Resistance to medical artificial intelligence. Journal of Consumer Research, 46(4), pp.629–650. https://doi.org/10.1093/jcr/ucz013

- Martin, K.D. and Murphy, P.E., 2017. The role of data privacy in marketing. Journal of the Academy of Marketing Science, 45(2), pp.135–155. https://doi.org/10.1007/s11747-016-0495-4

- Martin, K.D., Borah, A. and Palmatier, R.W., 2017. Data privacy effects on customer and firm performance. Journal of Marketing, 81(1), pp.36–58. https://doi.org/10.1509/jm.15.0497

- Pizzi, G., Scarpi, D. and Pantano, E., 2021. Artificial intelligence and marketing a systematic review. International Journal of Consumer Studies, 45(5), pp.710–736. https://doi.org/10.1111/ijcs.12695

- Schaub, F., Balebako, R., Durity, A.L. and Cranor, L.F., 2017. A design space for effective privacy notices. Proceedings on Privacy Enhancing Technologies, 2017(1), pp.17–36. https://doi.org/10.1515/popets-2017-0008

- Schumacher, C., Eggers, F., Verhoef, P.C. and Maas, P., 2023. The effects of cultural differences on consumers’ willingness to share personal information evidence from four countries. Journal of Interactive Marketing, 58, pp.18–37. https://doi.org/10.1177/10949968221136555

- Schumacher, E.G., Eggers, F. and Verhoef, P.C., 2023. Cultural differences in consumers’ willingness to share personal information evidence from four countries. Journal of Interactive Marketing, 63, pp.1–19. https://doi.org/10.1016/j.intmar.2023.03.001

- Shin, D., 2020. User perceptions of algorithmic decisions: perceptual evaluation of fairness, accountability, transparency, and explainability. Journal of Broadcasting & Electronic Media, 64(4), pp.541–565. https://doi.org/10.1080/08838151.2020.1843357

- Shin, D. and Park, Y.J., 2019. Role of fairness, accountability, and transparency in algorithmic affordance unlocking the black box of algorithmic decision making. Computers in Human Behavior, 98, pp.277–284. https://doi.org/10.1016/j.chb.2019.04.019

- Wedel, M. and Kannan, P.K., 2016. Marketing analytics for data-rich environments. Journal of Marketing, 80(6), pp.97–121. https://doi.org/10.1509/jm.15.0413

- Yeomans, M., Shah, A., Mullainathan, S. and Kleinberg, J., 2019. Making sense of recommendations. Journal of Behavioral Decision Making, 32(4), pp.403–414. https://doi.org/10.1002/bdm.2118

Article Rights and License

© 2025 The Author. Published by Sprint Investify. ISSN 2359-7712. This article is licensed under a Creative Commons Attribution 4.0 International License.